Just the facts, ma’am

I was in the middle of nowhere earlier today, Lancashire to be precise. When I eventually got a data signal on my phone, I was surprised to see that on Google Maps, the place I was staying also had my reservation dates next to it. “What the hell”, I was thinking. Not that I was up to anything (I was with my wife, so I have witnesses and an alibi), but I didn’t realise my data was being shared. It was then I remembered some of the things we’ve been covering in the DITA lectures and Lab sessions, from information retrieval to APIs and leading up to a brief introduction to technologies that would help enable a semantic web.

I was trying to think of an appropriate title for this week’s blog post, and whilst watching a short video by Rob Gonzalez about the semantic web being “links between facts” (see video), the often-repeated phrase came into my head. Once again, we have a phrase that is actually a misquote, as it was never said in the original television series of Dragnet, but I’ll come back to that a little later.

Gonzalez went on to say, using diagrams from Tim Berners-Lee, that Web 1.0 was about documents (that is to say, the use of hyperlinks to connect documents). Web 2.0 is about applications, from Twitter to Gmail, “and they go beyond just the data that you’re storing on the computers” (again, from the same video). But because the information is not shared between the different companies, the data is not connected, so if your details change, you could end up having to go into each account and update the same information. Web 3.0, in Gonzalez’s words, is about “connecting data at a lower level”, so that “specific data elements can be referenced between documents”.
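To make “links between facts” a little more concrete for myself, here is a minimal sketch in Python. It is only a toy under my own assumptions, not how any real semantic web technology is implemented: every identifier in it (`person:me`, `booking:123`, `hasEmail` and so on) is made up for illustration. Each fact is stored once as a subject/predicate/object triple, and other facts point at it rather than keeping their own copy.

```python
# A toy "web of facts": each fact is a (subject, predicate, object) triple.
# All identifiers and predicate names here are hypothetical examples.
facts = {
    ("person:me", "hasEmail", "old@example.org"),
    ("booking:123", "madeBy", "person:me"),
    ("booking:123", "atPlace", "place:lancashire-inn"),
    ("mailaccount:9", "ownedBy", "person:me"),
}


def objects(facts, subject, predicate):
    """Return every object linked to `subject` by `predicate`."""
    return {o for s, p, o in facts if s == subject and p == predicate}


def replace_fact(facts, subject, predicate, new_object):
    """Return a new set of facts with one fact swapped for a new value."""
    kept = {
        (s, p, o)
        for s, p, o in facts
        if not (s == subject and p == predicate)
    }
    kept.add((subject, predicate, new_object))
    return kept


def email_for_booking(facts, booking):
    """Follow booking -> person -> email instead of storing a copy."""
    return {
        email
        for person in objects(facts, booking, "madeBy")
        for email in objects(facts, person, "hasEmail")
    }


# Change the email once; anything that links to the person sees the change.
updated = replace_fact(facts, "person:me", "hasEmail", "new@example.org")
print(email_for_booking(updated, "booking:123"))
```

The booking never holds the email address itself, only a link to the person, so one change is enough. That is the opposite of the Web 2.0 situation described above, where each account keeps its own copy of your details.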

I was originally hoping to show a cartoon from The Far Side for this post, but after reading an online note from its creator Gary Larson about his concerns, I decided not to use the image and I hope my description will suffice in this case:

A man (looking as though he’s not the brightest guy on the block) stands in his yard holding a giant paint brush. All around him are painted labels: “Shirt”, “Pants”, “The House”, “The Dog”, etc. The cartoon ends with the caption “Now! … That should clear up a few things around here!”

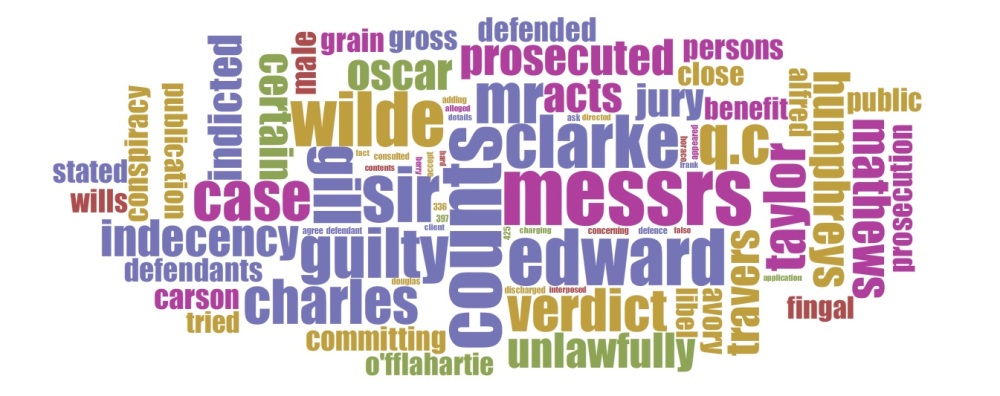

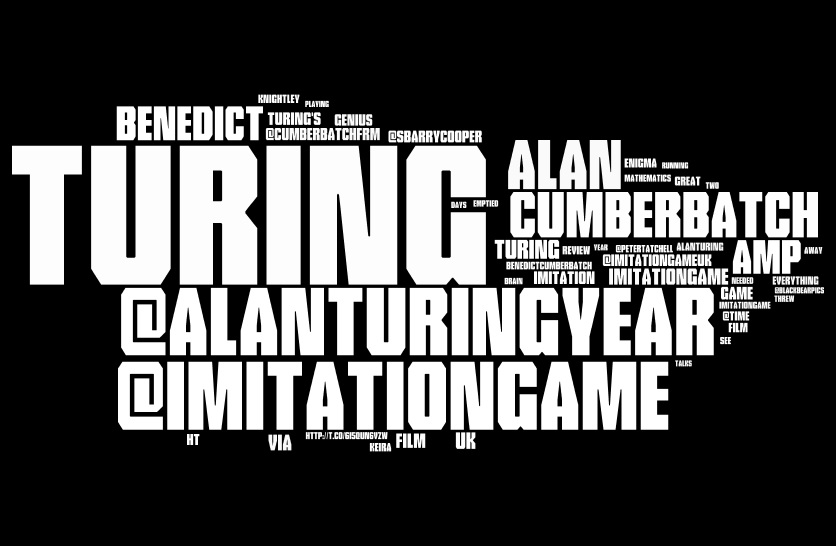

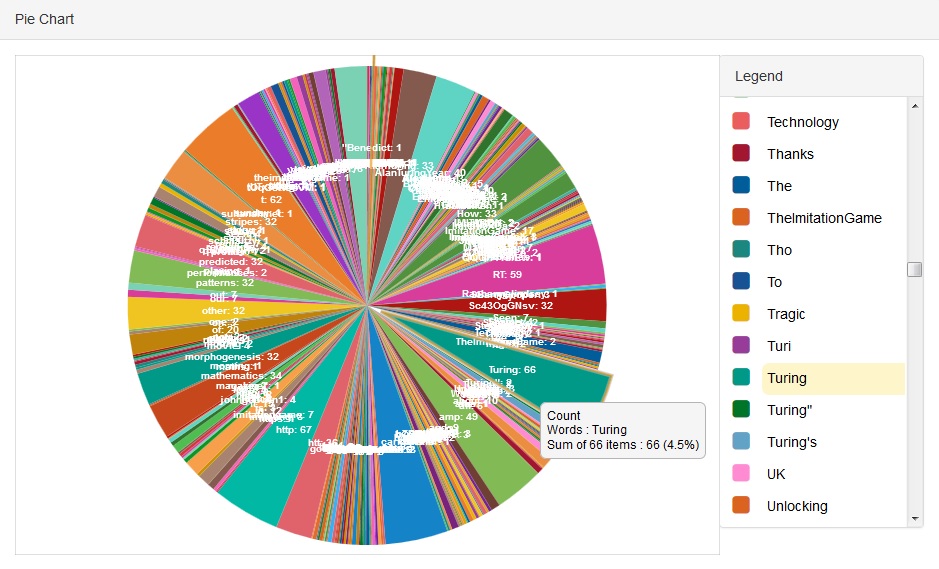

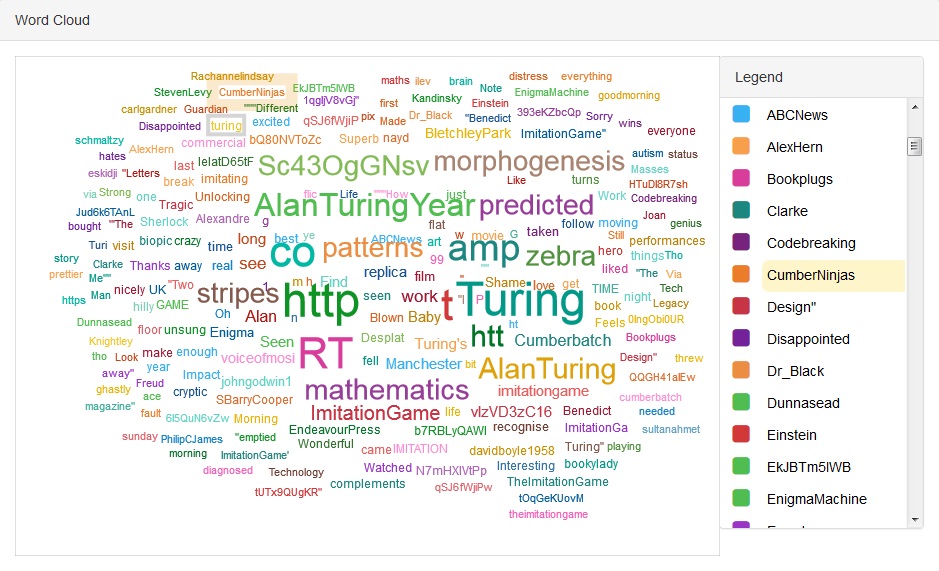

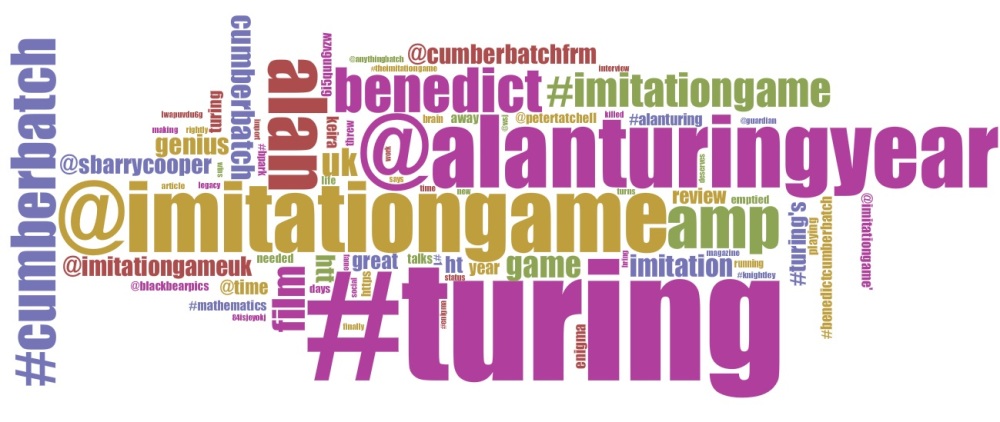

The cartoon was reproduced on a Cambridge Semantics webpage and it made me laugh, not because it was the first time I had seen it, but because it resonated with the feelings I had when I first started looking into the ideas behind semantic web technologies, namely, the idea of tagging everything in XML. I read with interest that there are people trying out Natural-Language Processing technologies that would attempt to extract the facts mentioned within a text (see Semantic Web vs. Semantic Technologies by Feigenbaum), but the difficulties faced mirrored some of the issues found when exporting text to be analysed through the word cloud comparisons a few weeks back, where the difference in punctuation in non-European languages meant that a program not designed to read it was unable to make an accurate word count.

Coming back to thoughts about copyright issues with The Far Side, I wondered whether that could be an issue with a web that links between facts. The World Wide Web Consortium website had an FAQ section that gave an answer that wasn’t quite an answer regarding data cached as a result of an integration process. It basically said that as a semantic web wouldn’t be that different fundamentally to the web we have now, the problems we have now would be more or less the same problems faced with Web 3.0.

I’ll finish this brief post on the semantic web by going back to the thought about facts. When is a fact not a fact, or when is a non-fact a fact? Now, if the original television series never said the words that everyone is now misquoting (so much so that it has become a common cultural reference), the misquote becomes a fact because people are applying the social codes within the system of the viewer/broadcaster relationship (regardless of whether they have misheard, overheard or heard it in passing like me, having never watched an episode of Dragnet in my life). I have a feeling people might be switching off by now, so I’ll end by saying that I’ve had a lot of fun writing these posts and this is by no means the last thinking on the blog.